AI in Asset Management: A Lightbulb Moment

This blog post was generated by AI. The original article is published at https://www.man.com/maninstitute/AI-asset-management-lightbulb-moment

Welcome to our latest discussion on the transformative role of AI in asset management. In this article, we explore how technology is not just automating tasks but reshaping strategic investment decisions. 📈

Key Takeaways

- Electricity is one of the most powerful inventions in human history, transforming communication, transportation, industry, and entertainment.

- Artificial Intelligence (AI), especially generative AI, is having a similarly transformative impact, enabling capabilities that were previously unimaginable.

- At Man AHL, generative AI is being used for data augmentation, feature engineering, data extraction, and portfolio construction.

The Rise of Generative AI

Thomas Edison's electric light bulb, invented in 1879, took decades to achieve mass adoption. Similarly, AI technology has gone through a gradual process of acceptance before reaching widespread application. Today, generative AI is rapidly changing various industries, including asset management.

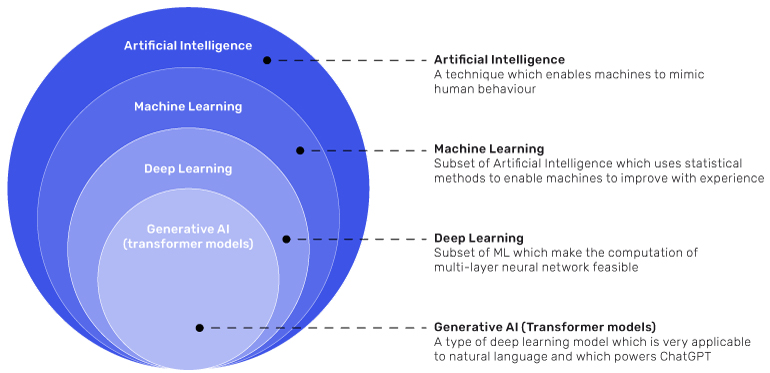

Generative AI is a subset of machine learning, which is itself a subset of broader AI. It lets users interact with models in natural language and generate new outputs, and this is what has driven much of the recent excitement around AI.

Applications of Generative AI at Man AHL

At Man AHL, we find that while generative AI has not yet replaced researchers or portfolio managers, it has significantly boosted productivity, allowing quantitative analysts ('quants') to focus more on alpha generation. Here are four specific use cases:

1. Coding with Copilot

Tools like GitHub Copilot can accelerate the development of working prototypes and initial research results by predicting code continuations. This reduces development time and facilitates knowledge sharing. For example, developers can ask the AI to explain various parts of code written by others.

We are developing chatbots capable of understanding our internal code. For instance, one chatbot can identify where to find metadata for a market code and retrieve timeseries prices, specifying the correct libraries and fields. This saves time, enhances our efficiency and leverages our proprietary knowledge.

2. Extracting Information for Trading

Man AHL started as a commodity trading advisor (CTA) trading futures contracts. As the business has grown and diversified, we now trade more novel and exotic instruments like catastrophe bonds. Each catastrophe bond has unique features that need to be clearly understood before investment.

We are testing a process in which ChatGPT extracts the relevant information and puts it into a systematic template for review. This frees up an analyst's time to focus on new research.
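As a rough illustration of what a "systematic template" could look like in code, the sketch below parses a model reply into a fixed set of fields and lists whatever is still empty so that a reviewer knows where to look. The field names, the JSON reply format and the example issuer are assumptions made for this example; they are not taken from Man AHL's process.

```python
import json
from dataclasses import dataclass, fields
from typing import List, Optional, Tuple


@dataclass
class CatBondTemplate:
    # Hypothetical template fields, chosen for illustration only.
    issuer: Optional[str] = None
    peril: Optional[str] = None
    trigger_type: Optional[str] = None
    maturity_date: Optional[str] = None


def fill_template(model_reply: str) -> Tuple[CatBondTemplate, List[str]]:
    """Parse a JSON reply into the template and list the fields left empty."""
    raw = json.loads(model_reply)
    known = [f.name for f in fields(CatBondTemplate)]
    # Keep only the fields the template defines; ignore anything extra.
    record = CatBondTemplate(**{k: v for k, v in raw.items() if k in known})
    # Empty fields are surfaced so the reviewer can fill them in by hand.
    missing = [name for name in known if getattr(record, name) in (None, "")]
    return record, missing


# A made-up reply standing in for the model's output.
reply = '{"issuer": "Example Re Ltd", "peril": "US wind", "coupon": "n/a"}'
record, missing = fill_template(reply)
print(record)
print("Needs review:", missing)
```

Keeping the template as a fixed data structure means every bond ends up in the same shape, which makes the human review step quicker.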

3. Assisting with Investor Queries

Our Client Relations team handles various questions from clients about Man AHL's systematic investment strategies. Many questions require extracting information from different investment materials like factsheets, presentations, due diligence questionnaires, and investment commentaries.

ChatGPT can automate several steps in this process. First, it can extract the required information from relevant documents. Second, it can draft a response ready for human analyst review. This efficiency frees up time for the team to focus on higher-value tasks.
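The two steps described above, extraction followed by drafting, can be sketched as a small pipeline. This is a minimal sketch under stated assumptions: the `llm` argument is a hypothetical stand-in for whatever model client is in use (any function that maps a prompt to a text reply), and the prompts and the `needs_human_review` status are invented for illustration.

```python
from typing import Callable, Dict

# Hypothetical interface: any function that maps a prompt to a text reply.
LLM = Callable[[str], str]


def answer_query(question: str, documents: Dict[str, str], llm: LLM) -> Dict[str, str]:
    """Extract evidence from each document, then draft a reply for review."""
    # Step 1: one extraction call per document, each with a narrow prompt.
    extracts = {}
    for name, text in documents.items():
        prompt = (
            "Quote the passages from the document that help answer the question.\n"
            f"Question: {question}\n"
            f"Document ({name}):\n{text}"
        )
        extracts[name] = llm(prompt)

    # Step 2: a separate drafting call that sees only the extracted evidence.
    evidence = "\n".join(f"[{name}] {passage}" for name, passage in extracts.items())
    draft = llm(
        "Draft a reply to the client question using only the evidence.\n"
        f"Question: {question}\n"
        f"Evidence:\n{evidence}"
    )

    # The draft is never sent directly: it is handed to a human analyst.
    return {"draft": draft, "evidence": evidence, "status": "needs_human_review"}
```

Returning the evidence alongside the draft lets the reviewer check each statement against its source document.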

4. Analyzing Macro Data

In quantitative macro research, ChatGPT can serve as a hypothesis generator, suggesting whether a particular economic timeseries has a fundamentally justifiable relationship with a certain market. These hypotheses can then be tested using statistical back-testing methods.

While ChatGPT in its current state will not replace our macro research team, its understanding can be as good as that of a graduate researcher. The main difference is that a human researcher needs breaks, while ChatGPT can systematically query thousands of relationships and potentially suggest signals.
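To make the "test the hypothesis" step concrete, here is a deliberately simple stand-in for a statistical back-test: a lagged correlation between an economic series and subsequent market returns, with a permutation test as a rough significance check. This is an assumption about what a first-pass test might look like, not a description of Man AHL's methods. The function names are hypothetical, and shuffling ignores autocorrelation in real timeseries, so the p-value should be read as optimistic.

```python
import math
import random
from typing import Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Plain Pearson correlation; returns 0.0 if either series is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / math.sqrt(vx * vy)


def lagged_correlation(indicator: Sequence[float], returns: Sequence[float], lag: int = 1) -> float:
    """Correlate indicator[t] with returns[t + lag]."""
    return pearson(indicator[: len(indicator) - lag], returns[lag:])


def permutation_p_value(indicator, returns, lag=1, n_shuffles=1000, seed=0) -> float:
    """Share of shuffled indicators whose |correlation| matches or beats the observed one."""
    rng = random.Random(seed)
    observed = abs(lagged_correlation(indicator, returns, lag))
    shuffled = list(indicator)
    hits = 0
    for _ in range(n_shuffles):
        rng.shuffle(shuffled)  # destroys any genuine time ordering
        if abs(lagged_correlation(shuffled, returns, lag)) >= observed:
            hits += 1
    # Add-one correction keeps the estimate strictly above zero.
    return (hits + 1) / (n_shuffles + 1)
```

Because a model can propose thousands of relationships, a check like this would have to be repeated for each one, and the results would then need adjusting for multiple testing before any signal is taken seriously.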

Lessons Learned

- Managing Hallucinations: ChatGPT's responses cannot be fully trusted. To mitigate hallucinations, we use tools to highlight where information occurs in the original text, aiding human checking.

- Prompt Engineering: If ChatGPT cannot perform a task well, it is often due to a misspecified prompt. Perfecting prompts requires significant resources, trial and error, and specific techniques.

- Breaking Down Tasks: ChatGPT cannot logically break down and execute complex problems in one go. Effective 'AI engineering' involves splitting projects into smaller tasks, each handled by specialist instances of ChatGPT with tailored prompts.

- Education for Wider Adoption: Understanding ChatGPT's capabilities and limitations is crucial. Skeptics should see its strengths, while enthusiasts need to learn its failures.
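The hallucination check mentioned in the first lesson, highlighting where information occurs in the original text, can be sketched in a few lines. This is a minimal sketch that assumes each extracted value should appear verbatim in the source; the function names and the `[[...]]` highlight markers are hypothetical choices for illustration, and a production tool would also need to handle paraphrased values.

```python
import re
from typing import Dict, Optional, Tuple


def locate(value: str, source: str) -> Optional[Tuple[int, int]]:
    """Return the (start, end) offsets of value in source, ignoring case."""
    if not value.strip():
        return None
    match = re.search(re.escape(value), source, re.IGNORECASE)
    return match.span() if match else None


def highlight_sources(extracted: Dict[str, str], source: str, context: int = 20) -> Dict[str, Optional[str]]:
    """Show each extracted value in its surrounding text, or None if absent."""
    report: Dict[str, Optional[str]] = {}
    for field, value in extracted.items():
        span = locate(value, source)
        if span is None:
            # Not found verbatim: a possible hallucination, flag for checking.
            report[field] = None
            continue
        start, end = span
        left = source[max(0, start - context):start]
        right = source[end:end + context]
        report[field] = f"{left}[[{source[start:end]}]]{right}"
    return report


# Made-up source text and extraction, for illustration only.
source = "The notes cover named storm risk and mature in June 2027."
extracted = {"peril": "named storm", "maturity": "June 2028"}
for field, snippet in highlight_sources(extracted, source).items():
    print(field, "->", snippet if snippet else "NOT FOUND IN SOURCE")
```

Here the maturity value does not appear in the source, so it is flagged rather than silently accepted, which is the point of the check.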

For a deeper exploration, visit the original article on Man Group's website: AI in Asset Management: A Lightbulb Moment.